From the 10th to the 13th of September 2017, Andreas Wulff-Jensen and Luis Emilio Bruni attended the 2017 International Conference on Mobile Brain-Body Imaging and the Neuroscience of Art, Innovation and Creativity.

This conference was about investigating and bridging the gap between art, neuroscience and technology, an endeavor of high interest for the lab. Many new connections and acquaintances were made during the conference, all of which can be found here

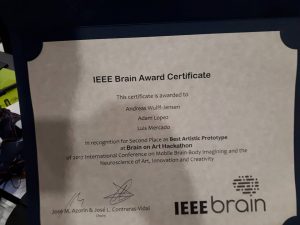

During the conference Andreas was part of the Brain on Art hackathon, which aimed to create BCI prototypes for artistic expressions. Andreas and his team (see the picture below).

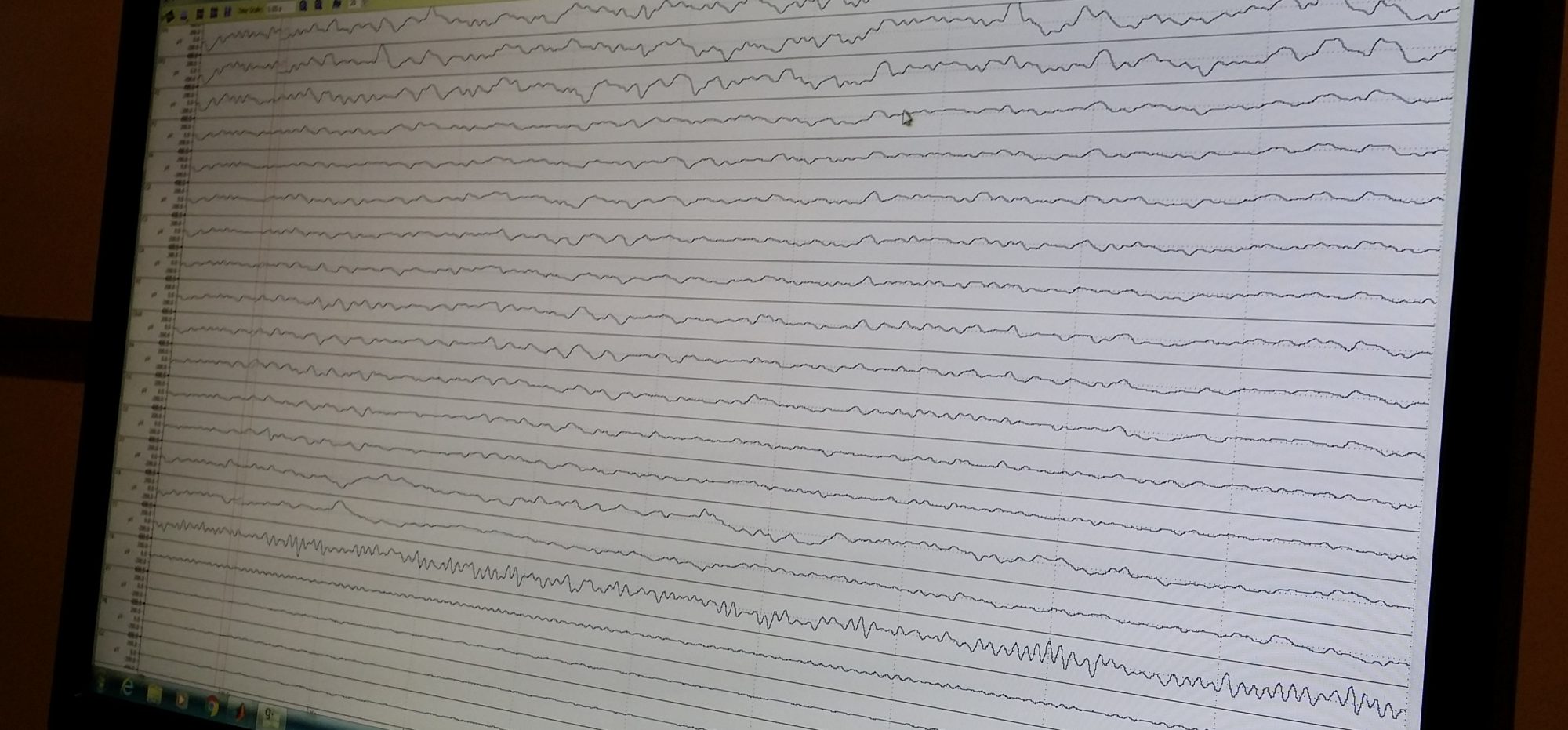

Worked with the challenge of creating a prototype which uses brainwaves to create paintings.

The prototype segments EEG signals into different frequency bands and associates each band with a different property in the application.

– The amplitude of the Delta signal (0.5-3Hz) was associated with the green color channel.

– The amplitude of the Theta signal (3-7Hz) was associated with the X coordinate on the screen.

– The amplitude of the Alpha signal (7-13Hz) was associated with the red color channel.

– The amplitude of the Beta signal (13-24Hz) was associated with the blue color channel.

– Lastly, the amplitude of the Gamma signal (24-42Hz) was associated with the Y coordinate on the screen.
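The mapping above can be sketched in a few lines of Python. This is only an illustrative sketch, not the hackathon code: the function names `band_amplitudes` and `to_stroke`, the use of a mean DFT magnitude as the "amplitude" of a band, and the calibration parameter `max_amp` used to scale amplitudes to the 0-1 range are all assumptions made for the example.

```python
import cmath
import math

# Band edges in Hz, taken from the list above.
BANDS = {
    "delta": (0.5, 3.0),
    "theta": (3.0, 7.0),
    "alpha": (7.0, 13.0),
    "beta": (13.0, 24.0),
    "gamma": (24.0, 42.0),
}


def band_amplitudes(samples, sample_rate):
    """Mean DFT magnitude per band for one EEG window.

    Uses a naive O(n^2) DFT to stay dependency-free (assumption:
    any band-power estimate could be swapped in here).
    """
    n = len(samples)
    sums = {name: 0.0 for name in BANDS}
    counts = {name: 0 for name in BANDS}
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                coeff = sum(
                    x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples)
                )
                sums[name] += 2 * abs(coeff) / n
                counts[name] += 1
                break
    return {
        name: (sums[name] / counts[name] if counts[name] else 0.0)
        for name in BANDS
    }


def to_stroke(amps, max_amp, width, height):
    """Map band amplitudes to a brush position and an RGB color.

    max_amp is a hypothetical calibration value: amplitudes are
    divided by it and clamped to the range 0..1.
    """
    def unit(name):
        return min(max(amps[name] / max_amp, 0.0), 1.0)

    return {
        "x": round(unit("theta") * (width - 1)),   # Theta -> X
        "y": round(unit("gamma") * (height - 1)),  # Gamma -> Y
        "color": (
            round(unit("alpha") * 255),  # Alpha -> red
            round(unit("delta") * 255),  # Delta -> green
            round(unit("beta") * 255),   # Beta  -> blue
        ),
    }
```

Calling `band_amplitudes` on each incoming EEG window and passing the result to `to_stroke` would yield one colored point per window; how the actual prototype windowed and scaled the signal is not described here.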

The color associations were based on a preliminary ERP test in which the subject saw 30 blue squares, 30 red squares and 30 green squares. This short test was run on two people. The preliminary results showed higher Alpha amplitudes for red compared to green and blue, higher Beta amplitudes for blue compared to green and red, and higher Delta amplitudes for green compared to blue and red.

Theta and Gamma were arbitrarily mapped to the X and Y positions on the screen, respectively.

With these rules, the following application was created.

With this application, Andreas and his team won the award for second-best artistic prototype and a prize of 300 euros.